Responsible AI: Definition, Importance & Practices

As data volumes grow and AI systems are increasingly used to make significant business decisions, using artificial intelligence ethically and impartially matters more than ever.

In today's article, we will discuss Responsible AI and, specifically, explore in detail:

- What is Responsible AI and why is it important?

- The key principles of Responsible AI

- The fundamental practices of implementing Responsible AI

Before we delve deeper, let's start with a basic definition.

What is Responsible AI and Why Is It Important?

Responsible AI is a set of practices that ensure a company develops and applies artificial intelligence safely, ethically, and in compliance with the law.

The goal of Responsible AI is to examine the possible impacts of AI systems, increase transparency, and reduce bias in how artificial intelligence is developed and used.

Applying its basic principles helps ensure that these systems collect, store, and use data in a way that complies with data privacy laws and prioritizes security against cyber threats.

Additionally, the responsible use of AI benefits businesses themselves: it can enhance their credibility and reputation and help them stand out from the competition.

Now that we've covered some basic aspects of Responsible AI, let's move on to the key principles.

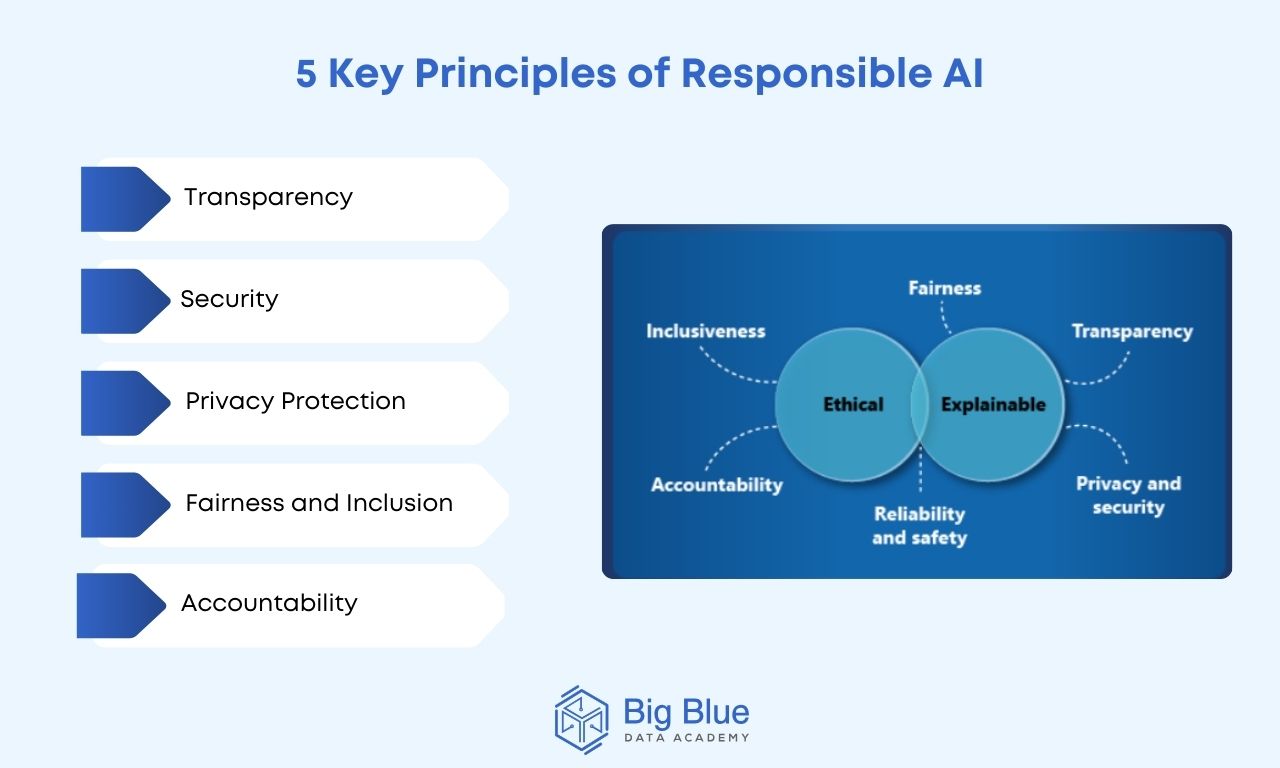

What Are the 5 Key Principles of Responsible AI?

The 5 key principles of Responsible AI are as follows:

Principle #1: Transparency

A fundamental principle of Responsible AI is transparency.

When the operation of AI systems is clear and understandable, the people affected can better understand the decisions generated by artificial intelligence, which builds trust among users and stakeholders.

Principle #2: Security

Another important principle of Responsible AI is security.

AI systems need to be secure and resilient against potential threats that jeopardize the security of user data, such as data breaches, while also being able to recover promptly from such attacks.

Principle #3: Privacy Protection

Privacy protection is a fundamental principle: AI systems should be built and operated with practices that comply with data privacy regulations and keep personal information confidential.

Principle #4: Fairness and Inclusion

AI systems need to be developed in a way that avoids potential biases and discrimination.

To achieve this, data scientists and AI engineers must verify, through appropriate checks, that all data used for algorithm training is diverse, representative, and free from biases such as those based on gender or race.
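As a rough illustration of one such check, the sketch below measures how each group is represented in a training set and flags groups that fall below a chosen share. The function name `representation_report`, the `min_share` threshold, and the toy records are all assumptions made for this example, not a standard method; a real check would use the attributes and thresholds that suit the specific use case.

```python
from collections import Counter

def representation_report(records, attribute, min_share=0.25):
    """Return each group's share of the dataset and whether it is below min_share.

    `records` is a list of dicts; `attribute` is the key to group by.
    Both the threshold and the record layout are illustrative assumptions.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {
        group: {
            "share": count / total,
            "under_represented": count / total < min_share,
        }
        for group, count in counts.items()
    }

# Hypothetical toy training set: 7 "male" rows, 2 "female", 1 "nonbinary".
records = (
    [{"gender": "male"}] * 7
    + [{"gender": "female"}] * 2
    + [{"gender": "nonbinary"}] * 1
)

report = representation_report(records, "gender")
for group, stats in report.items():
    print(group, round(stats["share"], 2), stats["under_represented"])
```

A share threshold is only a first signal. It says nothing about label quality or historical bias within each group, so it complements rather than replaces a deeper review of the data.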

Principle #5: Accountability

Accountability in the context of artificial intelligence means taking responsibility for, and being able to justify, the results and potential impacts of an AI system on both individuals and society.

It also includes the ability to intervene promptly and correct a system that deviates from its intended behavior.

Now that we've covered the basic principles of Responsible AI, let's see how it can be effectively implemented by a business.

How Can Responsible AI Be Implemented by a Business?

The application of Responsible AI and its basic principles varies from one business to another.

However, some of the key practices that a company can follow to implement Responsible AI include:

Practice #1: Build a Diverse Data Team

In the initial stages, a fundamental practice for a company is to create a diverse data team.

To ensure that AI products and services are not biased, it is crucial that they are developed by a diverse team of data scientists, machine learning engineers, and business leaders, among others.

Practice #2: Provide Continuous Training to Your Personnel

Continuously training your personnel on the basic principles of Responsible AI is an equally important practice for building a transparent, responsible, and ethical artificial intelligence system.

By educating your company's members and adopting a data-driven work culture, your staff remains informed about the regulations that need to be followed regarding the ethical use of data, laying the essential foundations for Responsible AI.

Practice #3: Conduct Regular Audits

Finally, it is essential to systematically monitor AI models even after their development and perform regular audits and tests to minimize errors, false positives, and biases.
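One way such an audit could look in practice is to compare false positive rates across groups on a labeled evaluation set. The sketch below is a minimal example under stated assumptions: the helper names `false_positive_rate_by_group` and `audit_passes`, the `max_gap` tolerance of 0.1, and the evaluation rows are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

def false_positive_rate_by_group(rows):
    """Compute the false positive rate per group.

    `rows` is an iterable of (group, y_true, y_pred) tuples with 0/1 labels.
    Groups with no true negatives are omitted from the result.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 0:
            negatives[group] += 1
            if y_pred == 1:
                false_positives[group] += 1
    return {group: false_positives[group] / n for group, n in negatives.items()}

def audit_passes(rates, max_gap=0.1):
    """Pass if the largest gap in rates between groups is within max_gap (an assumed tolerance)."""
    if not rates:
        return True
    return max(rates.values()) - min(rates.values()) <= max_gap

# Hypothetical evaluation rows: (group, true label, predicted label).
rows = [
    ("A", 0, 0), ("A", 0, 0), ("A", 0, 0), ("A", 0, 1), ("A", 1, 1),
    ("B", 0, 1), ("B", 0, 1), ("B", 0, 0), ("B", 0, 0), ("B", 1, 0),
]

rates = false_positive_rate_by_group(rows)
print(rates)
print("audit passes:", audit_passes(rates))
```

Running a check like this on a schedule, and whenever the model or its input data changes, turns the audit into a repeatable test rather than a one-off review.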

Wrapping Up

We’ve seen in detail what Responsible AI is, its significance, and how it can be implemented by a business.

As mentioned earlier, with the responsible use of AI, businesses can significantly enhance the trust of their users and their reputation, distinguishing themselves from the competition.

In recent years, the field of artificial intelligence has become especially prominent, and it continues to evolve.

So, if you are excited and want to read more about AI and Data Science, follow us for more educational articles!
